We can use VBA to retrieve webpages and comb through those pages for data we want. This is known as web scraping.

This post will look at getting data from a single web page. I've written another post that deals with getting data from multiple web pages.

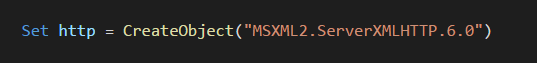

Here I'm going to use ServerXMLHTTP which provides a means to communicate with websites via VBA.

NOTE: ServerXMLHTTP is intended to be used for communication between web servers. Microsoft also provide XMLHTTP which is intended for use by programs (like my VBA) to access websites.

In testing I found XMLHTTP to encounter errors accessing sites where ServerXMLHTTP did not, so I stuck with ServerXMLHTTP.

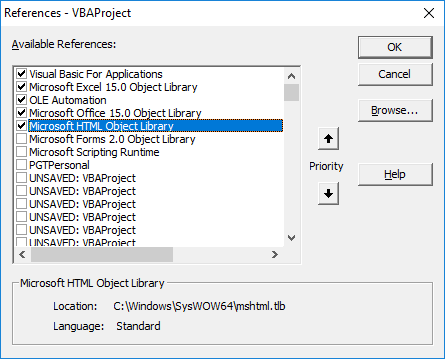

To use either you will need to set a reference in your VBA project.

Web scraping can be frowned upon if it puts too much load onto the web site, but there are legitimate reason for doing it. Just check the web site you are going to use to make sure you aren't violating their terms, and never write code that puts excessive load onto a site.

ServerXMLHTTP

Before you can use ServerXMLHTTP you need to set a reference to it in your VBA project.

Open your VBA editor (the keyboard shortcut is ALT+F11) and with your workbook selected in the VBAProject window, go to Tools -> References.

Look for Microsoft HTML Object Library and check the box beside it. Then click OK.

Extracting Data from a Web Page

Depending on what we want, we may need to dig around in the web page to understand how that page is constructed and locate what we are after.

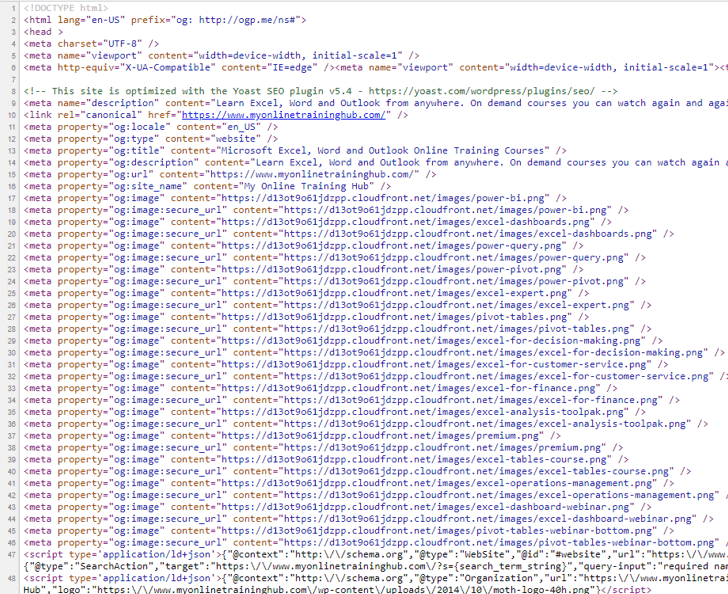

Load a web page in your browser (or just use this one), right click on the page and in the pop-up menu click on 'View Source' (or similar wording).

You'll see something like this

That's just some of the code that makes up our home page.

So how do you find what you want? If it's something buried in the code we have to use the Inspector built in to your browser.

You can see how to do this in Web Scraping Multiple Pages, but we don't need to do that for this example.

Extracting Links

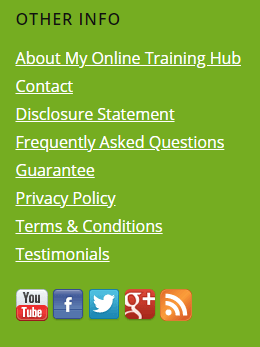

Let's say we want to get the web addresses of the social media presence for a company.

NOTE : in web speak, a web address is referred to as a URL - Uniform Resource Locator.

The URL's for the social media profiles will be links on the web page. If you look at our home page, right at the bottom are links to our YouTube channel, Facebook, Twitter feed, Google + and our RSS feed.

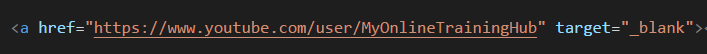

If you hover your mouse over each of these social media icons you'll see in the status bar at the bottom of your browser, the URL, e.g. for our YouTube channel https://www.youtube.com/user/MyOnlineTrainingHub

So to find that channel URL on our home page I could search the page for the string "youtube". Likewise for the other social media platforms I could search for "facebook", "twitter" etc.

Bear in mind that I am assuming the only link to YouTube and the other sites on our home page is to our social media profiles. Searching just for "youtube" could find a link to a YouTube video.

I know that this isn't the case for us, but you need to make sure on the sites you are working on.

Writing the Code

After declaring variables I need to create a ServerXMLHTTP object. I'll use this to request data from a website and check what response it sends.

Download the Example Workbook

The VBA code used in this post is in the example workbook. Download a copy for yourself.

Enter your email address below to download the workbook.

I'm reading a list of URL's from the ActiveSheet starting at cell A22, working my way down column A, and checking each site in turn.

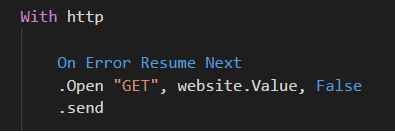

There are two parts to getting a web page. First you must prepare the request using .Open, then you .send the request.

If the .send generates an error, maybe because the URL is badly formatted, you need to handle this so I have turned off default error handling using 'On Error Resume Next'. I turn it on again later in the code.

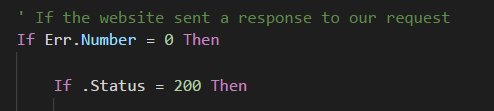

I can check the site responded by checking that no error occurred (Err.Number = 0)

It's possible for the site to send a valid response, but still not send you what you were expecting. Perhaps the page you requested is forbidden, or the web server encountered an error.

So I also need to check the Status of the response is 200, which means everything is OK. Check other HTTP status codes you may encounter.

If the site sucessfully returned the webpage I can now store that page in my HTML variable

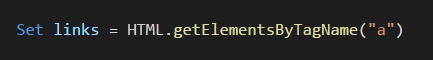

To find the links I am after I can search through the webpage for <a> tags. Web pages consist of lots of HTML elements created by these tags. Along with CSS and JavaScript, HTML forms the core of almost all web pages.

If we look at the source code of our home page again we see that the link to our YouTube channel looks like this

Notice the start of the <a tag at the beginning of the line.

I can get a list of all links on the page

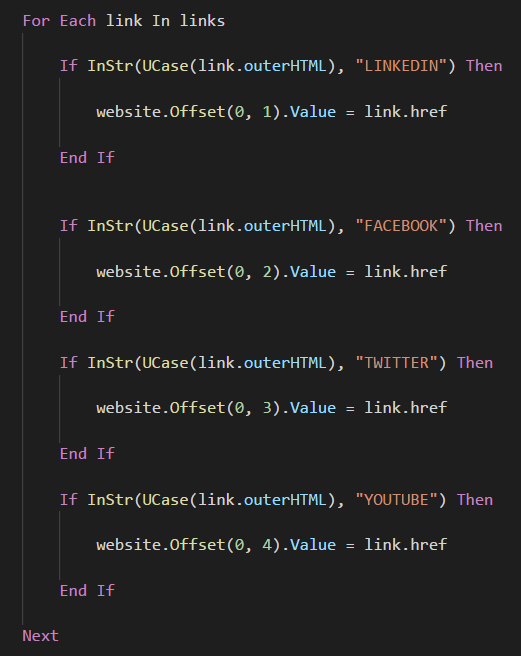

Now it's just a case of looping through all the links to find the ones I want, and writing those out to the sheet.

Hello everyone, my name is Maurizio

I am writing to you because: I have carefully studied your Web Scraping With VBA project on how to get links from a web page.

And so far everything is ok

But if I wanted to modify your project in such a way that it gets me some text extracted from the web page

My site in question is:

https://www.worldweatheronline.com/San-Giusto-Canavese-Weather/Piemonte/it.aspx

And the data I would like to get would be this (Set OggCol (0) = .getElementsByClassName (“col-sm-12”))

Which in turn is displayed by this code:

(Sheets (“Sheet1”). Cells (1, 1) = OggCol (0) .innerText)

But if I add this system in your listing, my data is not displayed

Why and how can I solve this problem?

Thanks Greetings from Maurizio

Hi Maurizio,

Please start a topic on the forum and post your file there so we can see everything you’ve done and we don’t have to recreate the code.

Regards

Phil

HI, please, how could use VBA Selenium Basic to manage an existing Chrome webpage? I don’t want vba open a new windows; i’d like that the webpage in Chrome already existing was managed by vba. thank you very much NIcola

Hi Nicola,

I’m not sure if that can be done. Doing a web search shows various results that suggest it may be possible with Selenium, but it appears to require the use of another language like Python or Java. I didn’t see anything about Selenium Basic and VBA.

Regards

Phil

Hi, thank you very much for this working sample !!

Is it possible to change the code in a way it writes the full source-code of a website to the next column in the worksheet?

Thanks in advance.

Hi Jan,

When you say full source code, do you mean the HTML for the entire page? If so, then yes. When the website responds you can dump the whole page like this:

Range(“A1”).Value = http.responseText

Regards

Phil

Hello! Where is the more complex example using the Inspector built in option?

I couldn’t find it.

Thank you

Hi Vincent,

At the end of the article, you have 2 more links, see the “More Web Scraping Posts” section.

Cheers,

Catalin

Hi, i need to scrap prices from hundreds of URL (same domain). In html is price in tag

The price is 55,00 and i need parce only them from each URL. The text between ” ” is different in each URL. Is it possible to edit your .xlsm file workbook to scraping price?

in tag meta itemProp=”description”

In this tag is price and some other text. I need to parce only price.

Hi Juraj,

Yes I imagine this can be done but I’ll need more information.

Please post your question on our forum and supply info like the website you want to get prices from.

Regards

Phil

I downloaded the example workbook, placed the url in a 24

Went to Developer VBA, Tools/References/

Highlighted Microsoft HTML Object Library, then clicked OK

https://www.myonlinetraininghub.com/

There was no output

Ok. I missed the Blue Button on Sheet 1 of the downloaded workbook ;(

However the link to your own site https://www.myonlinetraininghub.com returns blanks

Hi Ron,

Our firewall may be blocking you. If this is the case, the firewall will issue a response to the VBA code, so as the code is written it will not take that as an error.

You can examine what response you are getting by uncommenting some of the Debug.Print statements I left in the code for exactly this purpose.

Find the code

‘Debug.Print Len(.responseText)

‘Debug.Print .responseText

and remove the ‘ from the start of each line.

Now if you step through the code from the VBA editor (using F8) you can see what the response text is, it’ll be printed to the Immediate window.

This article explains more about debugging vba.

Cheers

Phil

How to get web page load time in Excel using macros. Please help

Hi Ananya

You have to store the current date-time before .send command, like: Startime=NOW()

Then, before reading the response .responseText, compare the current time with the stored time: Debug.Print (Now()-StartTime)/24/60

Is it possible to download documents Directly from ERP Softwares ( like SAP , Infor ln )

In that softwares we need to login , opens a special ( required ) sessions, gives required input , selects the devices & to click print option. Later that only back ground code will execute and we get the data.

All of these things can we do directly from excel ( using VBA )

Hi Sudheer,

I would use Power Query to get data from external databases as it can handle the login credentials where there are built in connectors. For example, Power Query has a connector for SAP HANA databases. You can also use ODBC drivers with Power Query to get data from databases that don’t have a built in connector. You can find out more about Power Query on my Power Query course page here.

Mynda

Thanks for providing this content.

However it doesn’t work for me, without changing anything in the downloaded excel, the sites return ‘Error with website address’, even though they have

at the start??

Could be issues with my firewall / web security at work.

Hi Will,

Can you upload a sample of your code? Use our forum to upload.

How to copy paste Table from Website ? ichange get element by name to “TR” but still not working

Thanks

If you want to get tables from the HTML you need to look for “table”.

If you look for “tr” you will get all rows from all tables but you may not know what row belongs to what table.

Phil

I am using iMac. I tried to set reference to “Microsoft HTML object Library”. I could not find it. Is it available for iMac or is it available only windows based systems ?

Hi Venu,

ServerXMLHTTP is included as part of the Microsoft XML Parser. The documentation is a bit out of date but does seem to indicate that it can only be installed on Windows.

https://support.microsoft.com/en-au/help/290761/frequently-asked-questions-about-serverxmlhttp

You can use Python to do web scraping on a Mac if that is an option for you?

Regards

Phil

Always get a timeout error. Any idea why that may be?

A timeout from the website you are trying to access?

What is the HTTP status code returned?

Can you access the website by copying/pasting the EXACT URL you are using in VBA into your browser?

Remember the URL must be properly formatted in VBA

If you open topic on the forum and supply your file I can have a look at it for you.

Regards

Phil